
This article was originally published by Barrett & Greene Inc.

Within two months of its release by OpenAI, ChatGPT attracted an estimated 100 million monthly users, setting a record as the fastest-growing consumer application. Organizations in the public sector were among these early adopters, and their use of this new tool is expanding at a feverish clip.

The 2023 Federal Chief Data Officer (CDO) Survey highlights this phenomenon in the federal government. Nearly all CDO respondents are now considering AI adoption, and almost one-fifth also hold AI-related titles, a trend spurred by initiatives such as President Biden’s October 2023 Executive Order on AI, which directed each agency to designate a chief AI officer. However, with 25% of CDOs lacking an AI strategy and only 28% prioritizing data strategy, a crucial question arises: what about good data, the cornerstone of AI?

This question doesn’t apply only to the federal government; it is of huge consequence to the cities, counties and states that are rushing to find new and useful applications for AI-based technologies.

The Importance of Good Data

According to the National Association of State Chief Information Officers (NASCIO) 2023 State CIO Survey, 73% of CIOs see generative AI, along with AI and machine learning more broadly, as the emerging IT areas likely to have the most impact over the next three to five years.

States, cities and counties across the country have been building and deploying AI chatbots to answer constituents’ frequently asked questions and to make government services faster and more efficient. States like Indiana and Virginia have been using predictive analytics on traffic and crash data to improve transportation policy and reduce congestion and fatalities. The California Department of Transportation recently asked IT companies to propose ways of using generative AI to improve traffic safety and reduce congestion. Other government applications of AI range from better predicting which individuals are at risk of homelessness, so that they can receive early intervention, to identifying disease outbreaks more quickly and precisely.

That’s exciting news, but there are pitfalls ahead: according to NASCIO, as of 2023, 69% of respondents were in the early stages of data governance, and only 27% considered their practice mature.

In the world of AI, data plays a starring role. Think of AI as a complex machine that learns and makes decisions based on how it is trained and the data it receives. The more high-quality data AI has, the better it can perform. Quality here means data that is accurate and ready to be used for decision-making. It’s also crucial that this data fairly represents different perspectives to avoid bias. This focus on fairness and the ethical use of data is pivotal to making AI trustworthy and effective in government, especially where AI helps inform decisions that can have major effects on people’s lives.

Effective policy recommendations in government require data that is both accurate and comprehensible. Before leveraging AI for decision-making, agencies need to ensure that the underlying data is orderly and reliable. Using AI prematurely, without this foundation of data integrity, can lead to adverse outcomes for residents.

Building the Foundation for Effective AI Use

For government entities venturing into AI, preparing data is a critical first step. Ways to prepare it include:

- Implementing strong data governance policies that address sharing, analysis, security, privacy, ethical usage, and other components of data management, particularly given the sensitive nature of public sector data.

- Assessing and organizing your existing data repositories to ensure they are clean, well-structured, and relevant to the problems you aim to solve with AI.

- Prioritizing data quality, since accurate and reliable data is crucial for effective AI outcomes.

- Developing a framework for ongoing data management that emphasizes diversity and representativeness, to mitigate biases and ensure equitable AI applications.

Only after these foundations are in place should public sector leaders move toward developing or deploying AI solutions that impact policies or decisions.
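For data teams wondering what assessing data quality and representativeness can look like in practice, here is a minimal Python sketch. It is an illustration only, not a Social Finance tool, and it assumes records arrive as a list of dictionaries; the field names (`client_id`, `zip`, `group`), the helper functions and the reference population shares are all hypothetical. It reports three simple indicators: how complete each required field is, which record IDs appear more than once, and how each group's share of records compares with an assumed reference population.

```python
from collections import Counter


def profile_records(records, required_fields, key_field, group_field):
    """Compute simple data-quality indicators for a list of dict records (hypothetical helper)."""
    total = len(records)

    # Completeness: share of records with a non-empty value in each required field.
    completeness = {}
    for field in required_fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        completeness[field] = filled / total if total else 0.0

    # Duplicates: identifier values that appear on more than one record.
    key_counts = Counter(r.get(key_field) for r in records)
    duplicate_keys = sorted(k for k, n in key_counts.items() if k is not None and n > 1)

    # Representation: each group's share of all records ("unknown" when the group is missing).
    group_counts = Counter(r.get(group_field) or "unknown" for r in records)
    representation = {g: n / total for g, n in group_counts.items()} if total else {}

    return {
        "completeness": completeness,
        "duplicate_keys": duplicate_keys,
        "representation": representation,
    }


def representation_gaps(representation, reference_shares):
    """Observed share minus assumed reference share for each reference group.

    Negative values flag groups that are under-represented relative to the
    reference population (for example, census shares for a service area).
    """
    return {g: representation.get(g, 0.0) - share for g, share in reference_shares.items()}


# Hypothetical example records; field names and values are illustrative only.
records = [
    {"client_id": "A1", "zip": "93001", "group": "north"},
    {"client_id": "A2", "zip": "", "group": "south"},
    {"client_id": "A2", "zip": "93003", "group": "south"},
    {"client_id": "A4", "zip": "93004"},
]

profile = profile_records(records, ["client_id", "zip"], "client_id", "group")
gaps = representation_gaps(profile["representation"], {"north": 0.5, "south": 0.5})
print(profile)
print(gaps)
```

In this example, a quarter of records lack a ZIP code, client A2 appears twice, and the "north" group is under-represented by 25 percentage points against the assumed reference. A real audit would go further, for instance by validating formats, checking timeliness and reconciling records across systems, but even simple indicators like these give governance conversations something concrete to review.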

In our work at Social Finance, we help government and nonprofit leaders make their data more accessible, integrated and useful for decision-making. A key starting place is defining the information a partner needs to achieve its goals and then building out the data infrastructure that will collect, organize and synthesize that data. But that process should not be a one-time exercise. It is critical that data users hold iterative conversations to assess which parts of the system are working, which are most useful and which need to change. Data needs change over time, and the people using the data come and go. Organizations therefore need to adjust their systems accordingly, so that the right information is collected, analyzed and shared in a way that is informative and understandable to its audiences.

For example, we partnered with WORK Inc., a Massachusetts-based nonprofit, to build out its data infrastructure so it could better understand program participation and workforce outcomes. As a result, its new data tracking system supported a project expansion in which payment is linked to achieving success metrics.

It’s essential to remember that the success of public sector AI initiatives hinges on the strength of data management and usage practices. By diligently auditing, refining, and enhancing the public sector’s approach to data – ensuring it’s accurate, comprehensive, and ethically governed – data leaders lay a solid foundation for AI applications. This groundwork is not just about technology; it’s about building trust, ensuring fairness, and effectively serving the public good.

Related Insight

Building the Infrastructure to Track Performance: Data-Driven Performance Management with WORK Inc.

WORK Inc. partnered with Social Finance to to develop the data infrastructure needed to better understand its clients’ journeys and use this data to guide program adjustments.

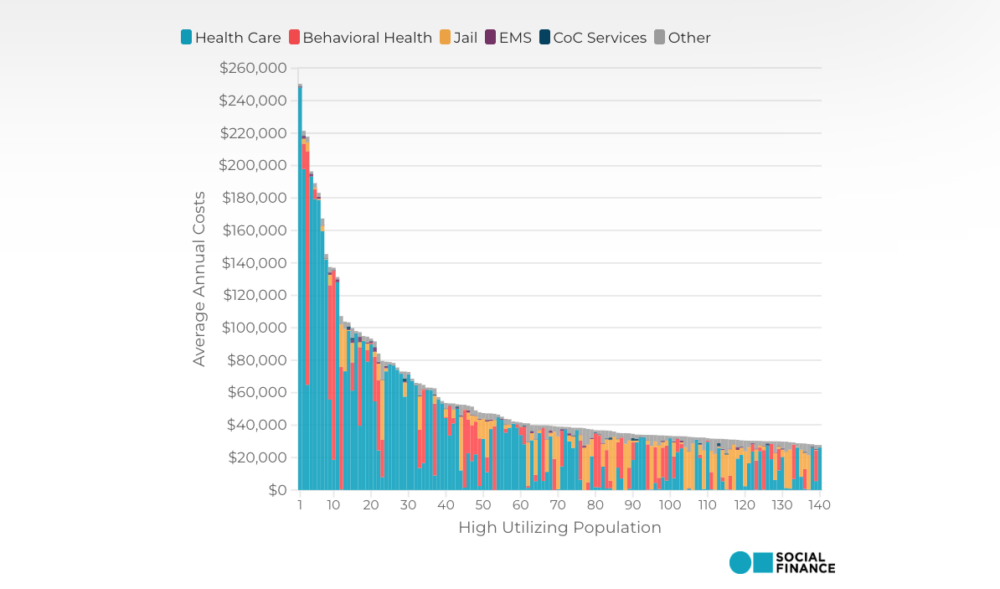

Homelessness in Ventura County: An Analysis of the Service Use and Costs of Persistent Homelessness

The costs of persistent homelessness are high—not only to the County, but to the health and wellbeing of individuals experiencing homelessness as well. This analysis attempts to measure the dollars and cents associated with the…

How Integrating Government and Community-Based Services Can Help County Residents Thrive

The Ventura Project to Support Reentry provided high-quality, individualized services—many delivered within the context of a global pandemic—to 346 participants on formal probation in Ventura.